Early in my days of learning Cocoa, I remember running into a situation where I had a bunch of integers that I needed to keep around, but wasn’t sure how to do that using an NSArray. As the documentation quickly makes evident, Cocoa collections pretty much all require their values and keys to be Objective-C objects, which an integer (int, NSInteger, or NSUInteger) is not.

- (void)addObject:(id)anObject;

- (void)setValue:(id)value forKey:(NSString *)key;

Based on the plethora of Google results on the topic, it’s obvious that I’m not the only one who’s run into this situation. Sadly, nearly all of them indicate that, because collections require objects, the only solution is to wrap your integers with NSNumber. I’m writing this blog post to let you know that there ARE other ways.

(This post got a little long-winded – if you don’t care about the academic conversation, go ahead and just skip to the code.)

Why You Should Care: NSNumber Comes With a Cost

Let’s start with why using NSNumbers might not be your best option: Objects are more expensive than scalars. In a nutshell, that’s all there really is to it; NSNumbers are often unnecessarily heavy for the job required of them. Objects require more memory to create than primitives, which in turn requires more CPU cycles to allocate. Another object cost, albeit a much smaller one, is the Objective-C dispatching required for the method calls needed to retrieve and compare basic values of an NSNumber.

Compare the following snippet of code: we are iterating through a loop 1 million times, and assigning our loop counter to a variable with two very different methods – using NSNumbers vs. using NSIntegers. (Logging code removed for brevity)

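const NSInteger collectionSize = 1000000; // 1 million iterations

NSNumber *numberValue;

NSInteger intValue;

for (NSInteger i = 0; i < collectionSize; i++) {

// numberWithInteger: takes an NSInteger; numberWithInt: takes a plain int

NSNumber *number = [NSNumber numberWithInteger:i];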

numberValue = number;

}

for (NSInteger i = 0; i < collectionSize; i++) {

intValue = i;

}

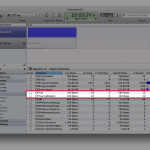

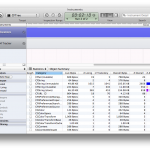

// 2.8 GHz i7 iMac

NSNumber 0.20776 Seconds

NSInteger 0.00208 Seconds

// iPad 1

NSNumber 3.76227 Seconds

NSInteger 0.00952 Seconds

Whoa, assigning 1 million integers is 100 times faster than creating and assigning 1 million NSNumbers on an iMac, and nearly 400 times faster on an iPad! In the land of performance optimization, a 100-400x improvement is almost always a win, even if it involves a small amount of extra code complexity.

A Cocoa Flavored Layer Cake

One of the most amazing (and simultaneously intimidating) parts of being an iOS/Mac developer is that for any particular problem, there exists a smorgasbord of APIs ranging from high-level libraries like Foundation down to straight C. For this occasion, our solution lies in the not-so-scary land that sits comfortably between Foundation and C: Core Foundation. Technically, Core Foundation IS a C API, but a lot of the nitty-gritty details of C have been abstracted away. When it comes to collections, this abstraction relieves us from needing to think about things like dynamically growing the memory behind a collection whose final size isn’t known up front.

Anyone familiar with Foundation should have very little trouble understanding Core Foundation, as much of the API is nearly identical, except that it is C and procedural rather than object-oriented. In fact, the two are so closely related that many of the equivalent types (e.g. NSArray/CFArray) only need a typecast to be used interchangeably (this is called Toll-Free Bridging, and is something we have planned for a future article).

Below is an example that creates mutable instances of a CFDictionary and a CFArray.

CFMutableArrayRef array;

CFMutableDictionaryRef dictionary;

array = CFArrayCreateMutable(NULL, 0, &kCFTypeArrayCallBacks);

dictionary = CFDictionaryCreateMutable(NULL, 0, &kCFTypeDictionaryKeyCallBacks, &kCFTypeDictionaryValueCallBacks);

NSString *aKey = @"ultrajoke";

NSString *aString = @"jerry";

CFArrayAppendValue(array, aString);

CFDictionarySetValue(dictionary, aKey, aString);

If you’ve been loving life in the realm of UIKit and Foundation, and haven’t spent any time with Core Foundation or any of the lower-level APIs, it’s possible your head is spinning at this vastly different-looking code. Trust me, it’s not so bad.

- CFArrayCreateMutable and CFDictionaryCreateMutable – This is C; those are just the function names.

- NULL – NULL is being passed for the allocator argument, and is the same as using kCFAllocatorDefault. This is something we can dive into more another time, but you know how in Objective-C you see things like [[MyClass alloc] init]? This is kinda like the alloc part. The important thing to know is that this argument impacts how memory is allocated, and you’re probably always going to want to use NULL.

- 0 – This is just the capacity; the docs tell us that 0 means these collections will grow their capacity (and memory) as needed.

- &kCFTypeArrayCallBacks, etc – These are pointers to structs of callback functions used for the values/keys, and are the kingpin of this whole article; more on them in a moment.

It’s All About The Callbacks

The sole reason we’ve moved to Core Foundation is that the functions for creating collection objects give us greater control over what happens when things are added and removed (notice I said things, not objects). This control is given by way of the callbacks we mentioned earlier. They vary depending on the collection type, but all of them fall into one of the following five basic types.

- Retain Callback – Function called when a value is added to the array or dictionary, as a value or key.

- Release Callback – Function called when a value is removed from the array or dictionary, as a value or key.

- Copy Description Callback – Function called to get the description of a value. (Remember descriptions from our previous post?)

- Equal Callback – Function called to determine if one value is equal to another.

- Hash Callback – Function used to calculate a hash for keys in a dictionary.

Of the five types of callbacks, two sound very “object-y” in nature: Retain and Release. In fact, these are the two that need to change if we want to store integers in our collections, because integers aren’t objects and don’t know anything about retain counts. According to the documentation for CFArrayCallBacks, CFDictionaryKeyCallBacks and CFDictionaryValueCallBacks, passing NULL for the retain and release callbacks results in the collection simply not retaining/releasing those values (or keys). What if we pass NULL for the other callback types? Again we turn to the documentation, and we find that they all have default behaviors that are used when NULL is provided. Description creates a simple description, Equal uses pointer equality, and Hash is derived by converting the pointer into an integer.
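To make the “NULL means do nothing special” idea concrete, here is a toy model in plain C. It is NOT how Core Foundation is implemented; ToyCallBacks, ToyArray and the toy_* functions are hypothetical names invented for this sketch. It only mirrors the behavior described above: a missing retain/release callback means the value is stored untouched, and a missing equal callback falls back to comparing the raw pointer-sized values.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical callback table, loosely inspired by the idea behind CFArrayCallBacks. */
typedef struct {
    const void *(*retain)(const void *value);    /* NULL: store the value untouched */
    void (*release)(const void *value);          /* NULL: nothing to do on removal  */
    int (*equal)(const void *a, const void *b);  /* NULL: compare the raw pointers  */
} ToyCallBacks;

#define TOY_CAPACITY 8

/* Fixed-capacity array of pointer-sized slots (a real collection would grow). */
typedef struct {
    ToyCallBacks callbacks;
    const void *slots[TOY_CAPACITY];
    size_t count;
} ToyArray;

/* Passing NULL for callbacks is treated as "every callback is NULL". */
void toy_init(ToyArray *array, const ToyCallBacks *callbacks) {
    static const ToyCallBacks none = { NULL, NULL, NULL };
    array->callbacks = callbacks ? *callbacks : none;
    array->count = 0;
}

/* Returns 1 on success, 0 if the array is full. */
int toy_append(ToyArray *array, const void *value) {
    if (array->count == TOY_CAPACITY) return 0;
    if (array->callbacks.retain) value = array->callbacks.retain(value);
    array->slots[array->count++] = value;
    return 1;
}

void toy_remove_last(ToyArray *array) {
    if (array->count == 0) return;
    const void *value = array->slots[--array->count];
    if (array->callbacks.release) array->callbacks.release(value);
}

/* Returns the index of the first matching slot, or -1 if there is none. */
long toy_index_of(const ToyArray *array, const void *value) {
    for (size_t i = 0; i < array->count; i++) {
        const void *stored = array->slots[i];
        int same = array->callbacks.equal
            ? array->callbacks.equal(stored, value)
            : (stored == value);
        if (same) return (long)i;
    }
    return -1;
}
```

With toy_init(&array, NULL), appending (const void *)(intptr_t)79 simply drops the number 79 into a slot, and toy_index_of finds it again by comparing raw values. No object is ever touched, which is the property we are about to rely on.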

If you’ve trudged all the way through this long-winded post, you’re probably starting to see where I’m going with this, so let’s look at some code.

The Code

// Non Retained Array and Dictionary

CFMutableArrayRef intArray = CFArrayCreateMutable(NULL, 0, NULL);

CFMutableDictionaryRef intDict = CFDictionaryCreateMutable(NULL, 0, NULL, NULL);

// Dictionary With Non Retained Keys and Object Values

CFMutableDictionaryRef intObjDict = CFDictionaryCreateMutable(NULL, 0, NULL, &kCFTypeDictionaryValueCallBacks);

// Setting values

CFArrayAppendValue(intArray, (void *)79);

CFDictionarySetValue(intDict, (void *)5, (void *)10);

CFDictionarySetValue(intObjDict, (void *)5, @"ultrajoke");

// Getting values

NSInteger arrayInt = (NSInteger)CFArrayGetValueAtIndex(intArray, 0);

NSInteger dictInt = (NSInteger)CFDictionaryGetValue(intDict, (void *)5);

NSString *dictString = (NSString *)CFDictionaryGetValue(intObjDict, (void *)5);

CFRelease(intArray);

intArray = NULL;

CFRelease(intDict);

intDict = NULL;

CFRelease(intObjDict);

intObjDict = NULL;

Yeah, that’s really all there is to it; we simply pass NULL for the callback pointers, which prevents the collections from trying to call retain/release on the values assigned to them. It’s worth pointing out that there are some caveats to be aware of:

- intArray and intDict blindly store pointer-sized values (object pointers, integers, booleans); nothing is retained or released.

- With NULL callbacks, equality for intArray and intDict is “pointer comparison”, which is a comparison of the raw values that were stored. For integers that is exactly what you want. For a pointer to an object (which will not be retained), it means equality is determined only by the memory address.

- Because the intObjDict dictionary uses kCFTypeDictionaryValueCallBacks, its values MUST be objects (either CFType or NSObject).
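The casts in the code above boil down to treating a pointer-sized slot as an integer. Here is a minimal plain C sketch of that round trip, so it can be tried without Core Foundation. The helper names int_to_slot and slot_to_int are made up for this example. Strictly speaking, the C standard leaves integer-to-pointer conversion implementation-defined, so treat this as a description of what common platforms do rather than a guarantee.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helpers: pack an integer into a pointer-sized slot and unpack it. */

/* intptr_t is an integer type wide enough to hold a pointer, so nothing is lost
   as long as the value itself fits in a pointer. */
const void *int_to_slot(intptr_t value) {
    return (const void *)value;
}

intptr_t slot_to_int(const void *slot) {
    return (intptr_t)slot;
}

/* "Pointer comparison" on packed integers is just integer comparison. */
int slots_equal(const void *a, const void *b) {
    return a == b;
}
```

Two practical consequences follow, as far as I can tell. First, a value wider than a pointer (say, a 64-bit integer on a 32-bit device) will not fit in a slot. Second, on common platforms an integer 0 packs to the same bits as NULL, and CFDictionaryGetValue also returns NULL when a key is missing, so a stored 0 and “not found” look identical. If you need to tell them apart, CFDictionaryContainsKey or CFDictionaryGetValueIfPresent should do the job.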